‘From the Ground Up’ is a new report and research-based framework designed in line with Avallain Intelligence, our strategy for the responsible use of AI in education, and built with and for educators and institutions.

Avallain and Educate Ventures Research Collaborate to Deliver Robust, Real-World Guidance on Ethical AI in Education

St. Gallen, June 2025 – As generative AI transforms classrooms and educational workflows, the need for clear, actionable ethical standards has never been more urgent. This is the challenge addressed in ‘From the Ground Up: Developing Standard Ethical Guidelines for AI Implementation in Education’, a new report developed by Educate Ventures Research in partnership with Avallain.

Drawing on extensive consultation with educators, multi-academy trusts, developers and policy specialists, the report introduces a practical framework of 12 ethical controls. These are designed to ensure that AI technologies align with educational values, enhance rather than replace human interaction and remain safe, fair and transparent in practice.

Unlike abstract policy statements, ‘From the Ground Up’ roots its guidance in classroom realities and product-level design. It offers publishers, institutions, content service providers and teachers a path forward that combines innovation with integrity.

‘Since the beginning, we have believed that education technology must keep the human element at its core. This report reinforces that view by placing the experiences of teachers and learners at the centre of how we build, evaluate and implement AI. Our role is to ensure that innovation never comes at the cost of well-being, agency or trust, but instead strengthens the human connections that make learning meaningful.’ – Ursula Suter and Ignatz Heinz, Co-Founders of Avallain.

A Framework Informed By The People It Serves

Developed over six months through research, case analysis and structured stakeholder engagement, the report draws on input from multi-academy trust leaders and expert panels of educators, technologists and AI ethicists.

The result is a framework of 12 ethical controls:

- Learning Outcome Alignment

- User Agency Preservation

- Cultural Sensitivity and Inclusion

- Critical Thinking Promotion

- Transparent AI Limitations

- Adaptive Human Interaction Balance

- Impact Measurement Framework

- Ethical Use Training and Awareness

- Bias Detection and Fairness Assurance

- Emotional Intelligence and Well-being Safeguards

- Organisational Accountability & Governance

- Age-Appropriate & Safe Implementation

Each control includes a definition, challenges, mitigation strategies, implementation guidance and relevance to all key education stakeholders. The result is a practical, structured set of tools, not just principles.

‘This report exemplifies our mission at Educate Ventures Research and Avallain: to bridge the gap between academic research and real-world educational technology. By working closely with teachers, school leaders and developers, we’ve created ethical controls that are both grounded in evidence and practical in use. Our goal is to ensure that AI in education is not only effective, but also transparent, fair and aligned with the human values that define great teaching.’ – Prof. Rose Luckin, CEO of Educate Ventures Research and Avallain Advisory Board Member.

Recommendations That Speak To Real-World Risks

Some of the report’s most relevant insights include:

User Agency Preservation

AI should support, not override, the decisions of teachers and the autonomy of learners. Design should prioritise flexibility and transparency, allowing human control and informed decision-making.

Cultural Sensitivity and Inclusion

The report calls for continuous audits, bias detection and cultural representation in AI training data and outputs, with robust mechanisms for local adaptation.

Transparent AI Limitations

AI systems must explain what they can and cannot do. Visual cues, plain-language disclosures and in-context explanations all help users manage expectations.

Adaptive Human Interaction Balance

The rise of AI must not mean the erosion of dialogue. Thresholds for teacher-student and peer-to-peer interaction should be built into implementation plans, not left to chance.

Impact Measurement Framework

The report calls for combining short-term performance data and long-term qualitative indicators to assess whether AI tools genuinely support learning.

Relevance Across The Education Ecosystem

For Publishers

The report’s recommendations align closely with educational publishers’ strategic goals. Whether using AI to accelerate content production, localise materials, or personalise resources, ethical deployment requires more than efficiency. It requires governance structures that protect against bias, uphold academic rigour and enable human review. Solutions like Avallain Author already embed editorial control into AI-supported workflows, ensuring quality and trust remain paramount.

For Schools And Institutions

From primary schools to higher and vocational education providers, the pressure to adopt AI is growing. The report provides practical guidance on how to do so responsibly. It outlines how to set up oversight mechanisms, train staff, communicate transparently with parents and evaluate long-term impact. For institutions already exploring AI for tutoring or assessment, the controls offer a roadmap to stay aligned with safeguarding, inclusion and pedagogy.

For Content Service Providers

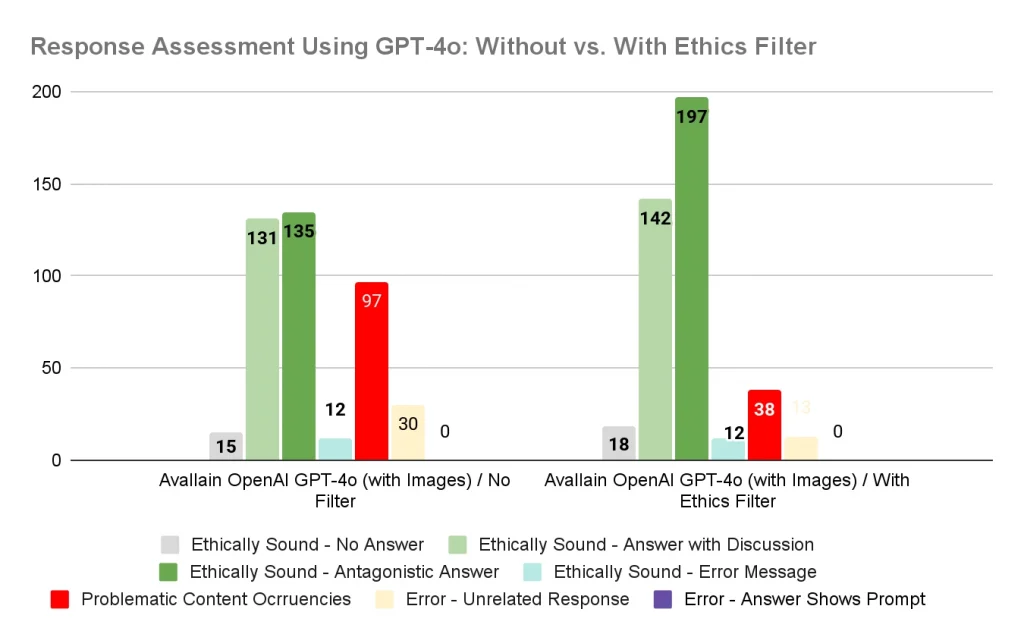

Agencies supporting publishers and ministries with learning design, editorial production and localisation will find clear implications throughout the report. From building inclusive datasets to ensuring transparent output verification, ethical AI becomes a shared responsibility across the value chain. Avallain’s technology, driven by Avallain Intelligence, enables these partners to apply ethical filters and maintain editorial standards at scale.

For Teachers

Educators are frontline decision makers who shape how AI is used in the classroom. The report explicitly calls for user agency to be preserved, ethical use training and awareness to be prioritised and teacher feedback to guide AI evolution. Solutions within Avallain’s portfolio, such as TeacherMatic, are already embedding these principles by offering editable outputs, contextual prompts and transparency in how each suggestion is generated.

The Role Of Avallain Intelligence: Putting Ethical Controls Into Action

Avallain Intelligence is Avallain’s strategy for the ethical and safe implementation of AI in education and the applied framework that aims to integrate these 12 ethical controls. It embeds principles such as transparency, fairness, accessibility and agency within the core infrastructure of Avallain’s digital solutions.

This includes:

- Explainable interfaces that clarify how AI decisions are made.

- Editable content outputs that preserve user control.

- Cultural customisation features for inclusive learning contexts.

- Bias Detection and Fairness Assurance systems with review mechanisms.

- Built-in feedback loops to refine AI based on classroom realities.

Avallain Intelligence was developed to meet and exceed the expectations outlined in ‘From the Ground Up’. This means publishers, teachers, service providers and institutions using Avallain tools are not starting from scratch but are already working within an ecosystem designed for ethical AI.

The work of the Avallain Lab, our in-house academic and pedagogical hub, continuously informs these principles and ensures that every advancement is grounded in research, ethics and real classroom needs.

‘The insights and methodology that underpin this report reflect the foundational work of the Avallain Lab and our commitment to research-led development. By aligning ethical guidance with practical use cases, we ensure that Avallain Intelligence evolves in direct response to real pedagogical needs. This collaboration shows how rigorous academic frameworks can inform responsible AI design and help create tools that are not only innovative but also educationally sound and trustworthy.’ – Carles Vidal, Business Director of the Avallain Lab.

Download The Executive Version

This is a practical roadmap for anyone seeking to navigate the opportunities and risks of AI in education with clarity, confidence and care.

Whether you are a publisher exploring AI-powered content workflows, a school leader integrating new technologies into classrooms or a teacher looking for trusted guidance, ‘From the Ground Up’ offers research-based recommendations you can act on today.

Download the executive version of the report to explore how the 12 ethical controls can help your organisation adopt AI responsibly, support educators, protect learners and remain committed to your educational mission.

About Educate Ventures Research

Educate Ventures Research (EVR) is an innovative boutique consultancy and training provider dedicated to helping education organisations leverage AI to unlock insights, enhance learning and drive positive outcomes and impact.

Its mission is to empower people to use AI safely to learn and thrive. EVR envisions a society in which intelligent, evidence-informed learning tools enable everyone to fulfil their potential, regardless of background, ability or context. Through its research, frameworks and partnerships, EVR continues to shape how AI can serve as a trusted companion in teaching and learning.

Find out more at educateventures.com

About Avallain

At Avallain, we are on a mission to reshape the future of education through technology. We create customisable digital education solutions that empower educators and engage learners around the world. With a focus on accessibility and user-centred design, powered by AI and cutting-edge technology, we strive to make education engaging, effective and inclusive.

Find out more at avallain.com

Contact:

Daniel Seuling

VP Client Relations & Marketing